Oscar Claim Assistant Built On GPT-4

By Benjamin Baker, Evan Poe, and Nazem Aldroubi

Background

Behind each health insurance claim are millions of combinations of variables that take into account a mixture of rules relating to regulations, contracts, and common industry practices among other factors. When a doctor has a question about a particularly complex claim, they contact one of Oscar’s claim specialists, who interprets the claims system’s output. It’s a complex and labor intensive process for health insurers.

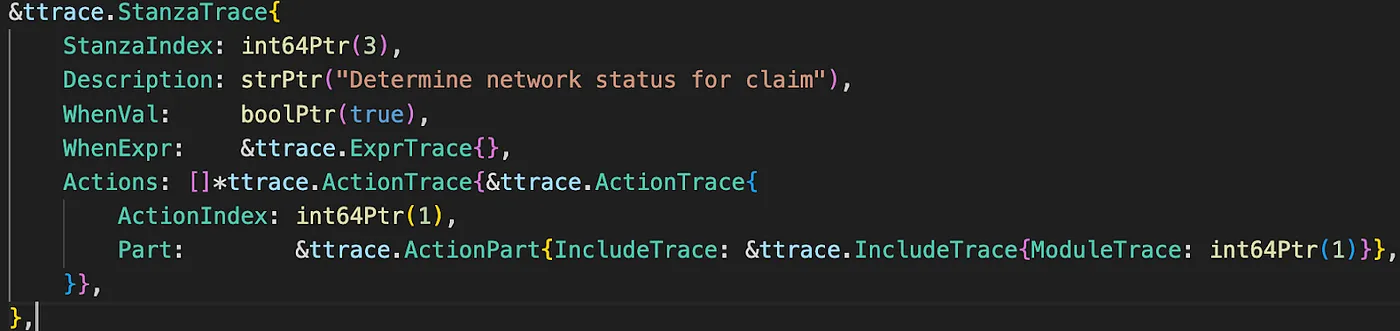

At Oscar, we built a claims system and its underlying data models from the ground up with an emphasis on traceability. The system is based on domain-specific languages that we developed to allow for extremely flexible and arbitrarily complex configuration and scripting. As a result, we have most of the context needed to answer these questions in a single data structure we call the “Claim Trace,” which is a detailed log of every decision made throughout a claim’s processing journey. It looks like a massive stack trace. Our claims processors have necessarily become skilled at navigating this large tree data structure to unearth answers and insights.

An excerpt from the raw data underlying a claim trace

This is a classical use case for LLMs: turn structured data into natural language. Done right, it automates an otherwise complex part of insurance operations, and gets providers paid faster and more accurately.

The Naive Approach

The naive approach, passing full claim stack traces into GPT-4, runs into a simple problem: the trace is several times too large, and quickly exceeds GPT-4’s token limit.

Removing nearly all of the intermediate data, such as arguments, variables, function calls, records retrieved from databases initially yields promising results. GPT-4 seemed to have a surprisingly solid understanding of the trace and how it related to regulations, industry standards, reimbursement policies, etc. (Even though we didn’t train it on any such data). For example:

User: Why was the claim denied?

Claim Assistant: Line 1 has been denied due to a Procedure-to-Procedure (PTP) edit as it is considered mutually exclusive or component services to a more comprehensive procedure on another claim.

While this answer accurately identifies what actions applied to the claim line, it does not provide sufficient detail to assist someone investigating the claim, including the precise service used as justification for the denial. GPT-4 clearly had difficulty identifying the most valuable bits of information with the little context it had in the stripped down trace. As a result, when we have claim experts score the output from LLM-generated claim explanations, humans and machines perform about even for simple claim scenarios, but the machine is unable to solve cases for average and complex scenarios.

Enter Call Chaining

Clearly, GPT requires more context from the stack trace. We introduce a new data structure called a “Skeleton Trace,” which is much like the table of contents in a novel, with an overview of the structure of a story without any of the details. The skeleton trace only includes a tree of which modules ran and which ones invoked each other, but no actual values such as variables and arguments.

Illustration of a skeleton trace

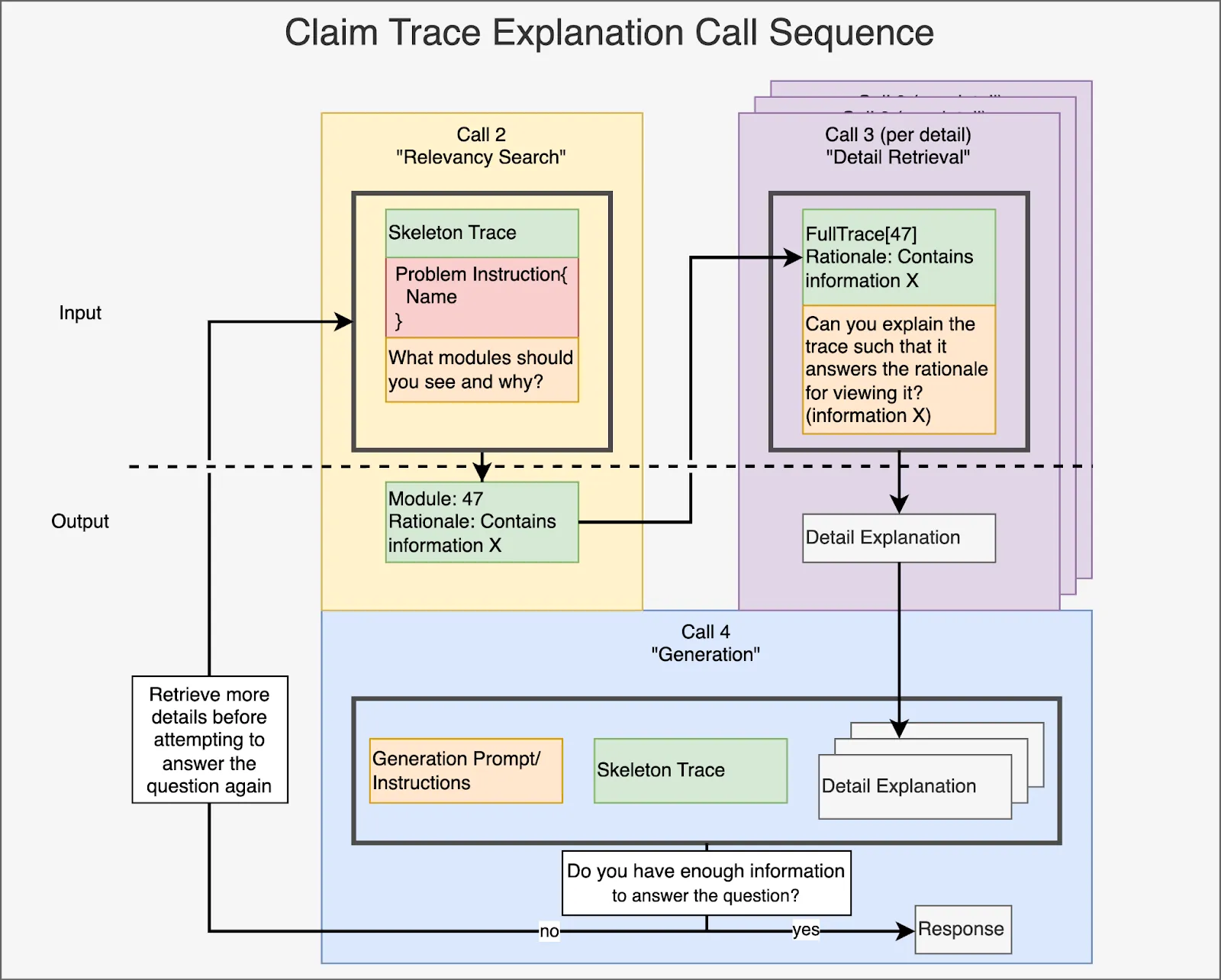

By providing GPT-4 with the skeleton trace, then asking which segments of the trace appear the most relevant, we get an idea which pieces of the full trace are needed to actually answer the question. This reduces the scope from solving one massive problem to solving several smaller problems. This is akin to chain-of-thought with function calling: we enable GPT-4 to request additional details required in the answer, by allowing it to ask the claims system for additional detail from the stack trace iteratively.

Illustration of call chaining

Recall the original example we showed above, and the “naive approach” output:

User: Why was the claim denied?

Claim Assistant: Line 1 has been denied due to a Procedure-to-Procedure (PTP) edit as it is considered mutually exclusive or component services to a more comprehensive procedure on another claim.

Using call chaining we can now ensure the relevant data needed to solve a problem is surfaced to GPT-4, allowing the model to work through the trace until it collects all the relevant information to solve the problem. Giving us an answer that provides far more value to our users:

User: Why was the claim denied?

Response: Claim line 1 has been denied due to a Procedure-to-Procedure (PTP) edit. Specifically, the CPT code 99212 was denied as it is considered mutually exclusive or component services to the more comprehensive procedure identified by CPT code 99202 on claim ABCD1234. Mutually exclusive procedures are those that cannot reasonably be performed at the same anatomic site or during the same patient encounter. Component services are those that are integral parts of a more comprehensive procedure. As per NCCI’s PTP edits, Oscar does not reimburse for mutually exclusive procedures or component services reported alongside comprehensive services.

This approach brought a sharp improvement to our metrics as it was able to solve problems that were too complicated for the naive version. Several buckets of problems reached 100% accuracy, however for a few difficult problem categories, Claim Assistant calculated the correct answer 80% of the time. A number of additional algorithmic improvements are necessary.

Adding Iterative Retrievals

Going back to the novel analogy, what happens if you read the table of contents and realize the information you needed probably couldn’t be solved in a single chapter? You would know that your investigation will be multi-step, and after each step you will have learned something to inform the next.

In Claim Assistant’s case, we observed situations where it initially failed to identify the right branches of the skeleton trace. In these circumstances, it was far more likely to produce incorrect answers. But was this because it truly didn’t know how to solve the problem or it simply didn’t grasp how to execute a complex, multi-step investigation? To test the theory, we took the final generation prompt but instead of asking GPT-4 to generate a response, we asked it if it felt confident in its answer. It turned out that the GPT-4 could consistently identify certain situations where it needed more information to solve the problems it struggled with.

We use that certainty assessment to determine if more details need to be retrieved. This enables Claim Assistant to engage in more multi-step problem solving.

Illustration of iterative retrieval

For those problems where Claim Assistant lacks certainty, this gives it a chance to pull in more data, which increases the odds that it solves the problem the second or third time around. This approach also means that more difficult problems will take longer to solve.

While testing this approach, we have observed examples where it looked for the details in some part of the trace and then learned it needed more information from another part of the trace. We’re optimistic about expanding this pattern to enable even greater multi-step problem solving.

What’s Next?

The beauty of owning all of our core admin systems ourselves is that we can very quickly go from experimentation to production. This methodology already has a landing spot in the user interface for our claims operators.

As we look ahead, we see LLM implementations like the Claim Assistant evolving into a core piece of an intelligent, self-learning claims system. While we have initially focused on descriptive analysis that tells the story of a claim, continued experimentation presents the opportunity to unlock even more advanced use cases like diagnostic insights and predictive analyses.